App Growth

AI Agents in Mobile Marketing: The Hybrid Model for Growth OS Execution

Jan 7, 2026

Written by: Michael Synowiec

I. TL;DR Answer Box: The Autonomous Agent Model

Element | Description |

TL;DR | The future of mobile growth is not defined by static dashboards, but by autonomous AI agents capable of executing tests and deploying fixes. The AI agent acts as the execution layer, while the human retains control over strategy and governance. |

Key Insight | AI Agents excel at pattern recognition, test automation, and iteration. Humans must focus on brand empathy, positioning, and strategy approval. |

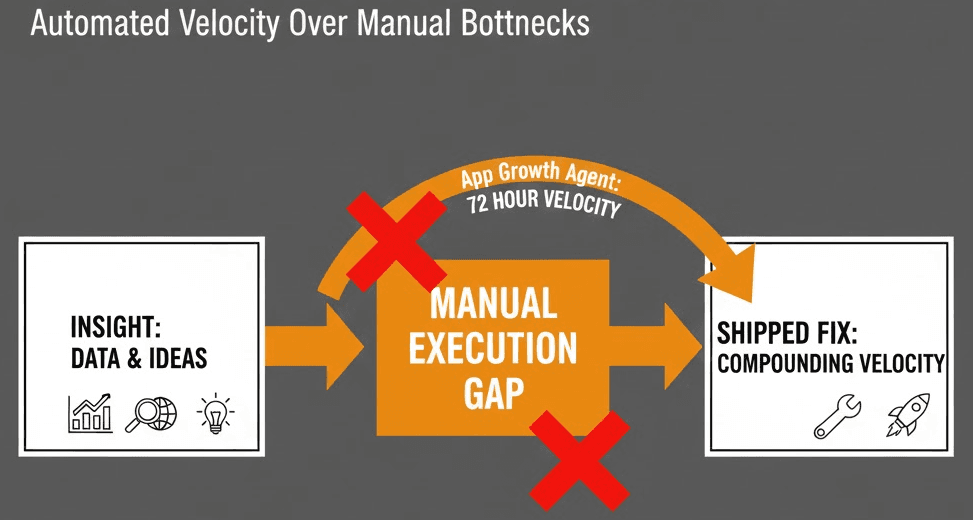

The Execution Shift | The AI agent's primary value is eliminating the manual execution gap that currently bottlenecks growth teams. AppDNA enables calm execution over chaotic cycles. |

Core Principle | Human judgment + AI execution = continuous growth. |

II. Introduction: From Analysis to Autonomy

The conversation around AI in mobile marketing has reached a fever pitch. But while every tool now promises "AI insights," very few deliver autonomous action. Most AI is still confined to reporting, leaving the hard, slow work of execution to manual effort, both at in-house teams and at traditional agencies.

This gap is precisely why the concept of the App Growth Agent - a dedicated, execution-focused AI system - is emerging as the inevitable next step. An App Growth Agent moves beyond telling you what is wrong; it proposes, executes, and safely rolls out the fix. AI agents close the execution gap, the gap where most apps lose velocity.

This shift introduces the essential hybrid model: a partnership where human creativity and judgment guide the strategy, and the AI system handles the execution velocity. This is the core realization: You can't out-think your competition, but you can definitely out-ship them. The AI agent is not a co-founder; it is a faultless, tireless executor.

III. What AI Agents Do Best: Execution and Velocity

AI agents are optimized for tasks requiring speed, scale, and pattern recognition, areas where manual human effort introduces delay and error. Within the App Growth OS model, AI agents serve as the engine of execution.

1. Autonomous Test Automation

AI agents eliminate the "set-up debt" associated with A/B testing. Instead of a team manually configuring traffic splits, integrating code, and setting up goal tracking for every test, the agent handles three things:

Test Orchestration: The agent automatically generates test variables (e.g., paywall price points, onboarding steps) and sets them live via SDK.

Traffic Allocation: It manages Traffic Caps, ensuring new experiments are only exposed to a safe segment of users (e.g., 5%), minimizing financial risk.

Instant Rollback: If the agent detects a negative technical or conversion impact against the defined KPI, it automatically reverts to the original variant without requiring human intervention. This feature enables safe automation.
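The traffic-cap idea above can be sketched as deterministic hash bucketing. This is a minimal illustration under stated assumptions, not the AppDNA SDK's actual mechanism: the function names `bucket_for` and `in_experiment`, the bucket count, and the 5% default are all hypothetical.

```python
import hashlib

BUCKETS = 10_000  # assumed granularity: each bucket is 0.01% of users


def bucket_for(user_id: str, experiment_id: str) -> int:
    """Map a user to a stable bucket in [0, BUCKETS) for one experiment."""
    key = f"{experiment_id}:{user_id}".encode("utf-8")
    return int(hashlib.sha256(key).hexdigest(), 16) % BUCKETS


def in_experiment(user_id: str, experiment_id: str, traffic_cap: float = 0.05) -> bool:
    """Return True if the user falls inside the capped share of traffic."""
    if not 0.0 <= traffic_cap <= 1.0:
        raise ValueError("traffic_cap must be between 0 and 1")
    threshold = round(traffic_cap * BUCKETS)
    return bucket_for(user_id, experiment_id) < threshold
```

Hashing the experiment ID together with the user ID keeps each user's assignment stable across sessions while giving different experiments independent 5% segments.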

2. Hyper-efficient Pattern Recognition

Humans are prone to confirmation bias; AI agents are not. They excel at processing massive, fragmented datasets (from Firebase, RevenueCat, AppsFlyer) to spot nuanced conversion leaks that take human analysts days to find.

Leak Detection: The agent can instantly flag a drop-off in a specific cohort (e.g., Android users in Brazil on Day 3) and correlate it with the last deployed feature, proactively suggesting a mitigation test.

ICP Example (Fitness/Wellness): In a fitness app, the AI agent detects a drop-off after the first workout preview. It then suggests moving the paywall to after two high-value moments (first workout completion and personalized summary) to increase trial conversion.
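As a rough sketch of what cohort-level leak detection involves, the snippet below compares each cohort's completion rate for a funnel step against the all-cohort rate and flags large shortfalls. The `CohortStep` type, the `flag_leaks` function, and the thresholds are illustrative assumptions, not part of any named product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CohortStep:
    cohort: str      # e.g. "android-BR"
    step: str        # funnel step, e.g. "day3_return"
    entered: int     # users who reached the step
    completed: int   # users who completed it


def flag_leaks(rows, min_users=200, relative_gap=0.20):
    """Flag cohorts trailing the all-cohort rate for a step by more than relative_gap."""
    totals = {}
    for r in rows:
        entered, completed = totals.get(r.step, (0, 0))
        totals[r.step] = (entered + r.entered, completed + r.completed)

    leaks = []
    for r in rows:
        if r.entered < min_users:
            continue  # too few users to judge (assumed cutoff)
        entered, completed = totals[r.step]
        baseline = completed / entered
        if baseline == 0.0:
            continue  # nothing to compare against
        rate = r.completed / r.entered
        if (baseline - rate) / baseline > relative_gap:
            leaks.append((r.cohort, r.step, rate, baseline))
    return leaks
```

A production system would also need to account for sample noise and seasonality before suggesting a mitigation test; this sketch only shows the comparison itself.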

3. Copy and Creative Iteration

Generative AI allows agents to continuously iterate on copy and creative assets far faster than any human team.

Localized Paywall Copy: An agent can generate and test 50 different paywall headlines, instantly translating and testing them across 10 different geographic markets to find hyper-localized conversion wins.

ICP Example (Creative Tools): For a creative tools app with low activation, the AI agent identifies that users who use the 'Share' feature are retained at 5x the rate. It automatically generates and deploys an in-app prompt promoting the "Share Your Design" feature to new users.

IV. What Humans Still Do Best: Strategy and Governance

The rise of the AI agent does not mean the end of the growth manager. It means the end of manual execution. The human role shifts from executor to Editor and Strategist, roles requiring empathy, foresight, and ethical judgment.

1. Positioning and Brand Empathy

AI agents can optimize copy for conversion, but they cannot define the brand's voice, emotional connection, or long-term positioning.

Strategic 'Why': The human strategist decides why the product exists and what transformation it offers. The agent only optimizes how that message is delivered.

Ethical Governance: Humans must set the ethical rules for monetization and communication, ensuring AI agents do not deploy predatory or misleading paywall copy, even if the conversion rate is high.

2. Strategy and Experiment Portfolio Design

While the AI agent is brilliant at suggesting high-impact experiments, a human is needed to align these suggestions with the company's long-term business goals and quarterly OKRs.

ICE Scoring Alignment: The human editor must approve the AI's suggestions based on business context (Impact, Confidence, Effort - ICE method), sometimes prioritizing a strategic, lower-impact test over a financially opportunistic one.

Vision Setting: The human defines the Retention Value Loop or the new product feature the AI agent must optimize.
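The ICE alignment step can be illustrated with a small ranking sketch. Two assumptions are worth flagging: the formula `impact * confidence / effort` is one common way to treat Effort as a cost (many teams instead score "Ease" and multiply), and the `strategic_boost` override is a hypothetical stand-in for the human editor's business context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Experiment:
    name: str
    impact: int      # 1-10, expected effect on the target KPI
    confidence: int  # 1-10, strength of supporting evidence
    effort: int      # 1-10, higher means more work


def ice_score(exp: Experiment) -> float:
    """Assumed formula: reward impact and confidence, penalize effort."""
    for value in (exp.impact, exp.confidence, exp.effort):
        if not 1 <= value <= 10:
            raise ValueError("ICE inputs must be between 1 and 10")
    return exp.impact * exp.confidence / exp.effort


def rank(experiments, strategic_boost=None):
    """Sort by ICE score, scaled by an optional human-set multiplier per test."""
    boost = strategic_boost or {}
    return sorted(
        experiments,
        key=lambda e: ice_score(e) * boost.get(e.name, 1.0),
        reverse=True,
    )
```

Passing `strategic_boost={"brand_refresh": 6.0}` is one way to model the editor deliberately promoting a strategic, lower-scoring test above a higher-scoring one.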

3. Approvals and Risk Management

The human role in the App Growth OS is the final layer of safety and governance.

The Approvals Inbox: Before any fix is deployed to 100% of the user base, the human editor must review the results and approve the execution log, even if the agent is certain of the win. This maintains control.

Cross-App Learning: Strategists are responsible for taking anonymized learning from the App Growth OS and applying it across multiple product titles in a portfolio.

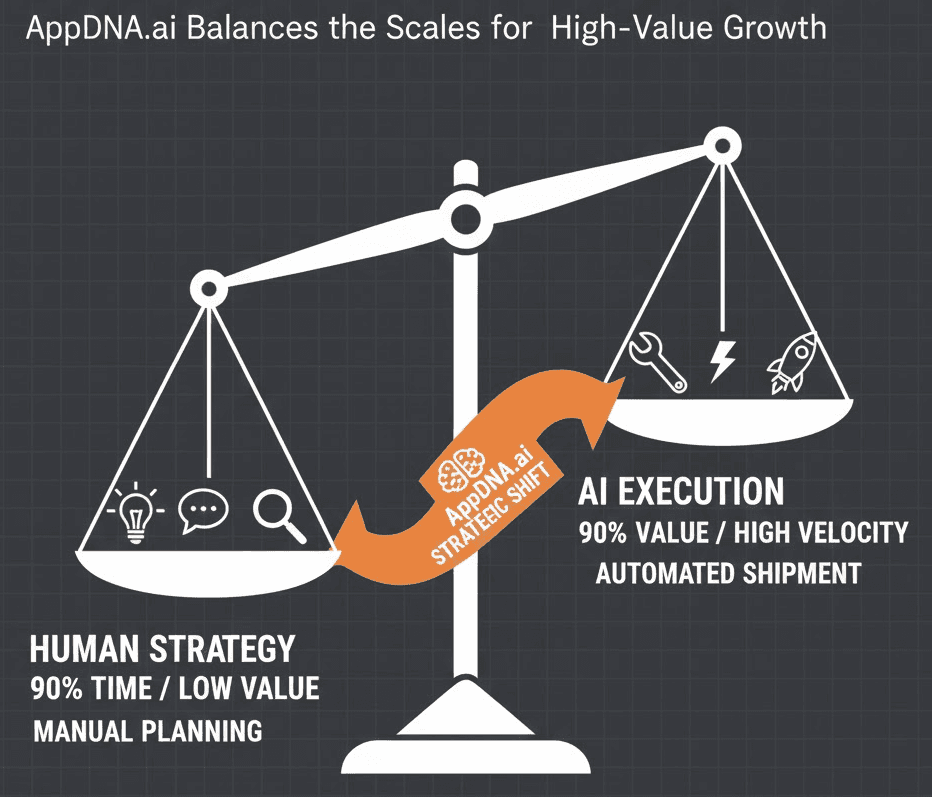

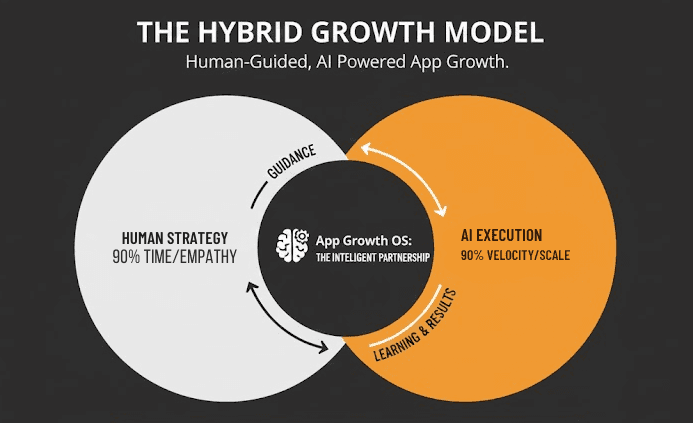

V. The Hybrid Model: Human Judgment + AI Execution

The future of app growth is not defined by AI taking over, but by the precision of the partnership. This is the Hybrid Model, where human strategic capacity is finally unlocked by AI execution velocity. The App Growth OS is the console that facilitates this partnership, transforming the traditional slow workflow into a rapid, compounding growth engine. This is the essence of the One OS Philosophy.

1. The Strategy-to-Execution Hand-off

The primary function of the Hybrid Model is to create a seamless, non-manual hand-off between strategy and execution:

Human Defines the WHAT & WHY: The human strategist sets the high-level business goal (e.g., "Increase trial-to-paid conversion by 10% this quarter" or "Establish habit formation by Day 3").

AI Agent Defines the HOW & WHEN: The App Growth Agent instantly translates this goal into a prioritized portfolio of high-velocity A/B tests (e.g., "Test 3 paywall price points for segment X," "Deploy retention trigger Y").

This tightly integrated model ensures that the human team focuses 90% of its energy on strategic differentiation, while the AI agent focuses 90% of its energy on flawless, automated execution.

2. The Power of Compounding Velocity

In the manual model, every strategic decision hits a bottleneck in execution (engineering time, QA, deployment). The Hybrid Model removes this friction, making velocity the core driver of competitive advantage:

Weekly Wins: Instead of hoping for one large win per quarter, the App Growth OS facilitates dozens of small, safe, weekly wins.

Compounding Growth: These weekly micro-improvements compound over time. At the same win rate and the same average lift per winning test, a team running 4 tests per month will outgrow a team running 1 test per quarter, simply because it banks more wins. The AI agent's role is to sustain this consistent, high-frequency testing cadence.
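The compounding argument can be made concrete with a short calculation. The numbers used here (a 25% win rate and a 2% lift per winning test) are purely illustrative assumptions, not measured results, and the model assumes each win multiplies the metric independently.

```python
def compounded_growth(tests_per_year: int, win_rate: float, lift_per_win: float) -> float:
    """Expected yearly growth factor if every winning test multiplies the metric."""
    expected_wins = tests_per_year * win_rate
    return (1.0 + lift_per_win) ** expected_wins


# 4 tests per month versus 1 test per quarter, same win rate and lift.
fast_team = compounded_growth(tests_per_year=48, win_rate=0.25, lift_per_win=0.02)
slow_team = compounded_growth(tests_per_year=4, win_rate=0.25, lift_per_win=0.02)

print(f"fast team: {fast_team:.3f}x, slow team: {slow_team:.3f}x")
```

Under these assumptions the faster team ends the year at roughly 1.27x the starting metric versus 1.02x for the slower team. The gap comes entirely from cadence, since win rate and lift are held equal.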

3. Governance: Maintaining Control Over Automation

A core concern for any executive adopting AI is maintaining control. In the Hybrid Model, human judgment serves as the essential governance layer:

Approvals Inbox: The AI agent does not scale fixes to the full user base on its own; it runs tests within the Traffic Caps and then presents the recommended winning variant (along with its predicted impact, confidence score, and statistical significance) to the human editor. The final decision to scale the fix to 100% of the user base remains a human click.

Safety Guardrails: Human strategy dictates the Traffic Caps and Instant Rollback thresholds. For example, the human dictates, "If my trial conversion drops by more than 2% in the first 48 hours, kill the test immediately." The AI agent monitors and enforces this rule autonomously.
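The example rule above ("more than 2% in the first 48 hours") might be encoded along these lines. This is a sketch only: the `Guardrail` type and `should_roll_back` function are hypothetical names, and the code assumes the 2% refers to a relative drop against the control's conversion rate rather than percentage points.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrail:
    max_relative_drop: float = 0.02  # assumed: relative drop vs. control
    window_hours: float = 48.0       # rule applies only inside this window


def should_roll_back(
    guardrail: Guardrail,
    control_rate: float,
    variant_rate: float,
    hours_since_start: float,
) -> bool:
    """Return True when the variant breaches the human-defined guardrail."""
    if control_rate <= 0.0:
        raise ValueError("control_rate must be positive")
    if hours_since_start > guardrail.window_hours:
        return False  # outside the monitored window
    relative_drop = (control_rate - variant_rate) / control_rate
    return relative_drop > guardrail.max_relative_drop
```

For example, `should_roll_back(Guardrail(), 0.10, 0.097, 12)` returns `True`, because a fall from 10% to 9.7% is a 3% relative drop within the window. The human sets the thresholds once; the agent only evaluates them.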

By operating through the App Growth OS, this powerful collaboration ensures that human judgment guides the direction while AI guarantees the speed and safety of the journey.

VI. Use Cases: The AI Agent in Action (Examples)

Example 1: Autonomous Paywall Optimization

Agent Action: The App Growth Agent detects that users who interact with the app for more than 90 seconds convert at a 40% higher rate than those seeing the paywall immediately.

Human Approval: The Editor approves the agent's suggestion to test moving the paywall two screens later.

Execution: The AppDNA SDK automatically deploys the paywall timing test to a targeted segment with Traffic Caps enabled, measuring whether the later placement closes the conversion gap.
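Because the 40% difference in this example is observed rather than experimentally controlled, the test result is what an editor would actually review. One standard way to check such a result is a two-proportion z-test, sketched below. The function name and the sample counts in the usage note are illustrative assumptions; the article does not say which statistical method the agent uses.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare control (a) and variant (b); return (relative_lift, z, p_value)."""
    if n_a <= 0 or n_b <= 0 or conv_a <= 0:
        raise ValueError("need positive sample sizes and control conversions")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    if std_err == 0.0:
        raise ValueError("standard error is zero; rates are degenerate")
    z = (p_b - p_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
    return (p_b - p_a) / p_a, z, p_value
```

With 100 of 2,000 control users converting and 140 of 2,000 variant users converting, `two_proportion_z_test(100, 2000, 140, 2000)` reports a relative lift of 40% with a p-value below 0.01.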

Example 2: Predictive Retention Fix

Agent Action: The App Growth Agent flags a cohort of users who logged in on Day 1 but showed no activity on Day 2 - a common precursor to churn.

Human Approval: The Editor approves the agent's push notification test design, targeting these users with a personalized "Progress Reminder."

Execution: The OS automatically executes the push test through the integrated CRM and measures the lift in Day 3 retention as soon as the data is available.
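The cohort in this example can be identified with a simple filter. The sketch below assumes Day 1 is the install date and that activity is available as a set of active dates per user; the `at_risk_users` function and the input shapes are hypothetical.

```python
from datetime import date, timedelta


def at_risk_users(install_dates, activity, as_of):
    """Return users active on Day 1 with no activity on Day 2.

    install_dates: dict of user_id -> install date (assumed to be Day 1)
    activity:      dict of user_id -> set of dates with any activity
    as_of:         current date; users whose Day 2 has not ended are skipped
    """
    flagged = []
    for user_id, installed in install_dates.items():
        day_two = installed + timedelta(days=1)
        if as_of <= day_two:
            continue  # Day 2 is still in progress or in the future
        active_days = activity.get(user_id, set())
        if installed in active_days and day_two not in active_days:
            flagged.append(user_id)
    return sorted(flagged)
```

The resulting list is the audience for the "Progress Reminder" push test, with a held-out share of the same cohort needed to measure lift.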

VII. Comparison: AI Agent vs. Manual Effort

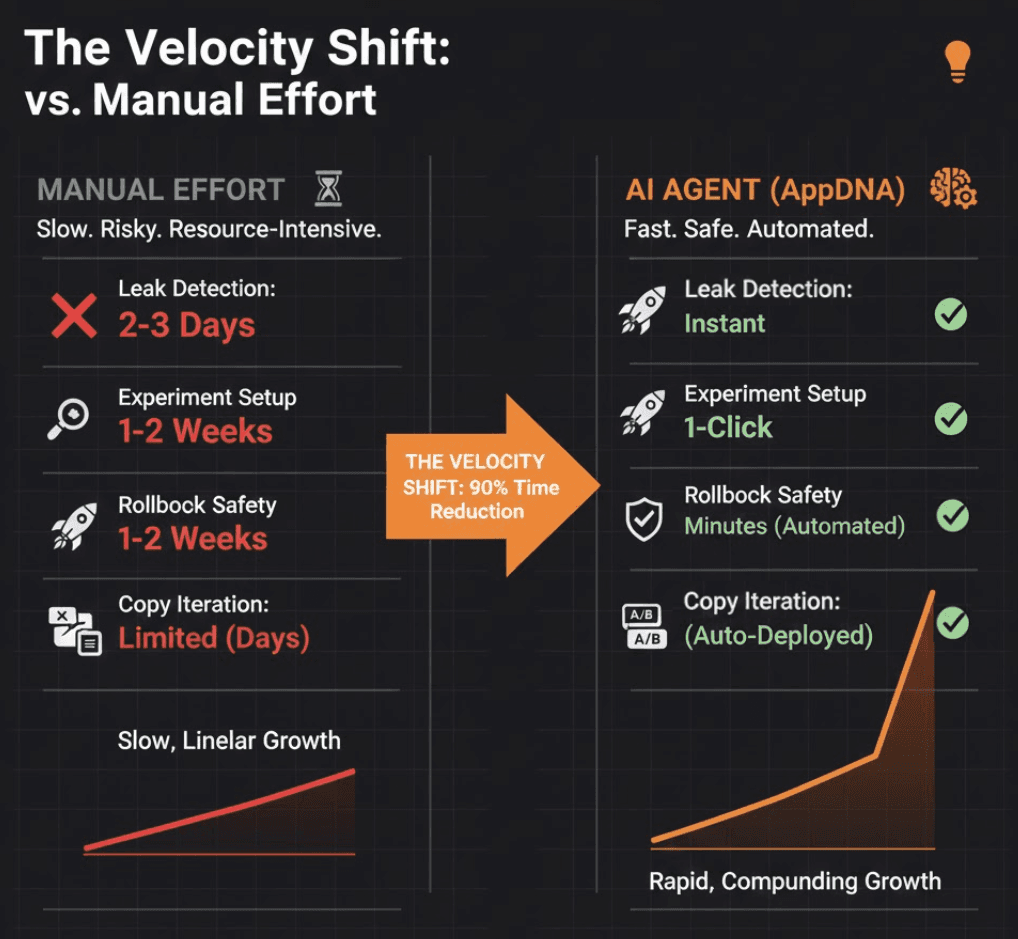

The shift from manual coordination to the App Growth OS is a quantifiable change in efficiency:

| Task | Manual Effort (In-House / Agency) | AI Agent (AppDNA) |
| --- | --- | --- |
| Leak Detection | 2-3 day manual audit (SQL, reporting, reconciliation). | Instant flag and suggestion, correlated across all data streams. |
| Experiment Setup | 1-2 week sprint (code integration, QA, tracking config). | 1-click approval via the Approvals Inbox. Deployed via SDK. |
| Rollback Safety | Manual monitoring; requires developer release to revert. | Instant Rollback - automatic reversion within minutes if KPI threshold is breached. |
| Copy Iteration | Limited to 3-5 variations per language. | Hundreds of localized variants tested and deployed automatically. |

Key Takeaway: The App Growth OS reduces the time spent on execution debt by 90%, freeing human capacity for pure strategy.

VIII. Conclusion: The AI Agent Era is Here

The future of mobile marketing is not a distant prediction. It is already here, changing the role of the marketer from a coordinator of chaos to a strategic editor.

The App Growth OS is the system that turns your app into a self-improving entity. By embracing the AI agent model for execution, your team can finally focus on the strategic questions that truly define brand and market dominance.

Growth Glossary

App Growth OS: The execution layer that unifies fragmented tools and automates the deployment of growth experiments (fixes) via SDK.

Execution Gap: The time delay (often weeks) between generating an insight and safely deploying a measurable fix in the live app.

Traffic Cap: A safety mechanism that limits exposure to a new experiment to a small, contained segment of users (e.g., 5%) to minimize revenue risk.

ICE Scoring: Impact, Confidence, Effort - a prioritization framework used by human strategists to rank potential experiments.

☑️ Try This Week (90-Second Improvements)

Audit your funnel for execution lag greater than 7 days.

Identify one test that could be automated (e.g., paywall copy or timing).

Add rollback rules (safety caps) for your next major release.

Implement 5% traffic caps for your current experiment.